Optical Lens Attack on Monocular Depth Estimation based Autonomous Driving

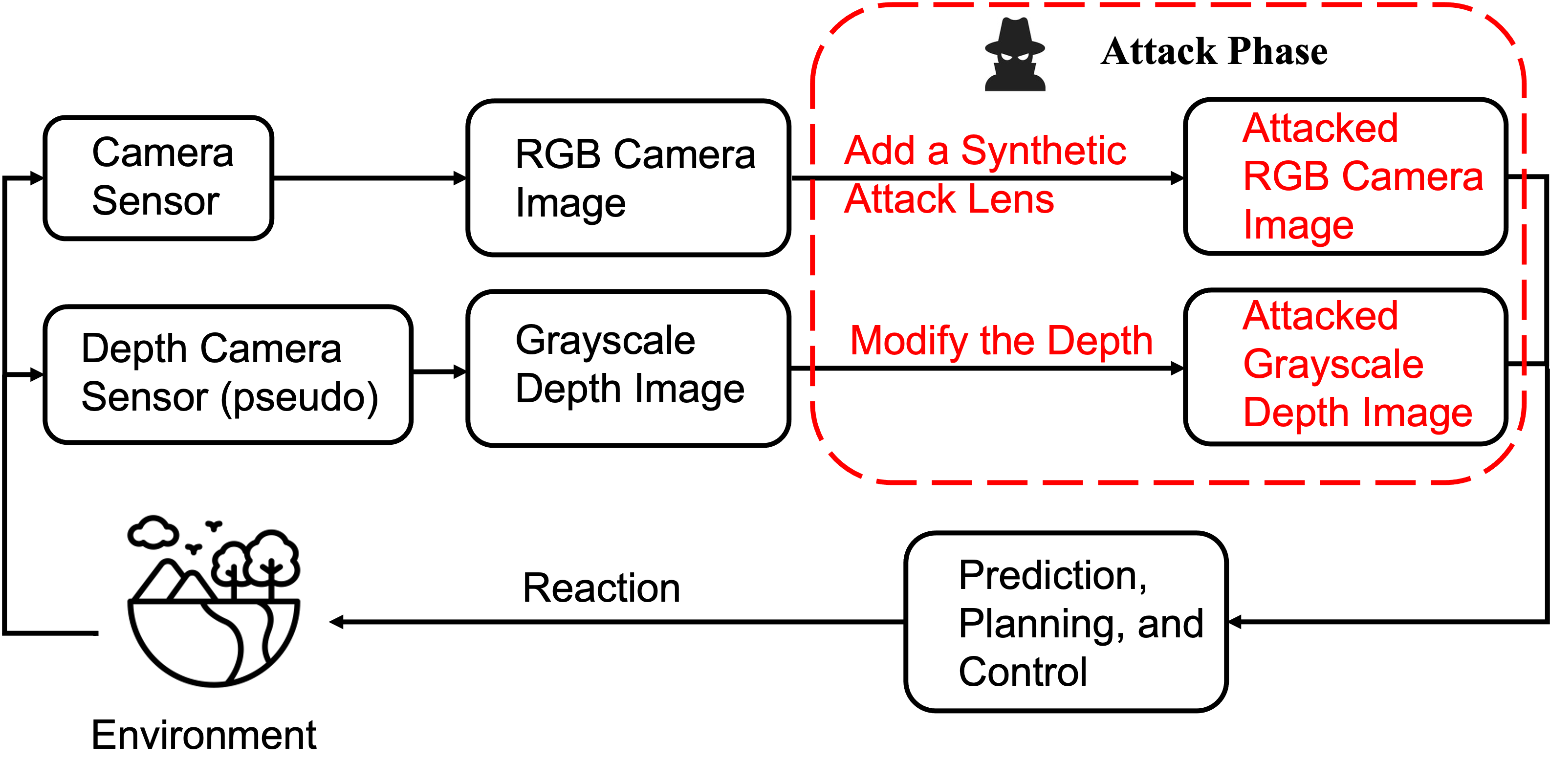

Monocular depth estimation plays a crucial role in vision-based autonomous driving (AD) systems (e.g., Tesla). It relies on a single camera image to estimate the depth of objects, enabling driving decisions such as stopping a few meters before a detected obstacle or changing lanes to avoid a collision. In this paper, we investigate the security risks of the monocular depth estimation algorithms used in AD systems. By exploiting the vulnerability of monocular depth estimation and the principles of optical lenses, we introduce OpticalLensAttack, a physical-world attack that strategically places optical lenses in front of a car's camera to manipulate the perceived depth of objects. OpticalLensAttack comes in two forms, the concave lens attack and the convex lens attack, each using a different type of lens to induce false depth perception. We first construct a mathematical model of the attack that incorporates the various attack parameters. We then simulate the attack in the digital world and assess its real-world performance in driving scenarios to show its impact on monocular depth estimation models. Finally, we adopt an attack optimization method to improve attack accuracy. The results indicate that the proposed attacks raise potential security concerns for AD systems.
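To give a rough feel for why a lens in front of the camera can shift perceived depth, here is a minimal sketch. It is not the mathematical model from the paper. It assumes a paraxial thin lens, a pinhole camera, and a depth estimator that relies only on apparent object size (real models use many more cues). The function names and the parameters `focal_length_m`, `lens_to_camera_m`, and `object_to_lens_m` are hypothetical, chosen for illustration.

```python
def angular_magnification(focal_length_m, lens_to_camera_m, object_to_lens_m):
    """Paraxial angular magnification of a thin lens seen by a pinhole camera.

    Assumed sign convention: focal_length_m > 0 is a convex lens,
    focal_length_m < 0 is a concave lens. Distances are in meters.
    """
    f, s, d = focal_length_m, lens_to_camera_m, object_to_lens_m
    if f == 0 or s < 0 or d <= 0:
        raise ValueError("need f != 0, lens_to_camera_m >= 0, object_to_lens_m > 0")
    # Tracing the chief ray through the lens to the pinhole gives
    # M = f * (d + s) / (f * (d + s) - s * d).
    denom = f * (d + s) - s * d
    if denom == 0 or f * (d + s) / denom <= 0:
        raise ValueError("image is inverted or undefined for this geometry")
    return f * (d + s) / denom


def size_cue_depth_m(true_depth_m, magnification):
    """Depth implied by apparent size alone (illustrative assumption):
    an object that looks M times larger is read as M times closer."""
    return true_depth_m / magnification


if __name__ == "__main__":
    true_depth = 10.0 + 0.1  # object 10 m from the lens, lens 0.1 m from the camera
    for name, f in (("concave", -0.5), ("convex", 0.5)):
        m = angular_magnification(f, 0.1, 10.0)
        print(name, round(m, 3), round(size_cue_depth_m(true_depth, m), 2))
```

Under these assumptions, a concave lens with a 0.5 m focal length shrinks the object and pushes the size-implied depth from 10.1 m to about 12.1 m, while a convex lens of the same focal length magnifies it and pulls the depth to about 8.1 m. With the lens directly at the pinhole (`lens_to_camera_m = 0`) the magnification is 1, so the effect in this simplified model comes from the gap between lens and camera.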

Attack Experimental Setup

Optical Lens Attack Demonstration

We present real-world driving tests of the concave lens attack and the convex lens attack. We also showcase the potential consequences of the attacks, i.e., car accidents and traffic jams.

Attacks in Real World Driving Test

Physical Attacks

Concave Lens Attack

Convex Lens Attack

End-to-end System Simulation in CARLA

Using the CARLA simulator, we conduct closed-loop simulations that include the other integral components of autonomous driving, such as prediction, planning, and control. CARLA is an open-source simulator designed to support the development, training, and validation of autonomous driving systems.

Benign Autonomous Driving Scenario

The video demo shows the AV coming to a full stop right behind the fire truck.

Autonomous Driving Scenario under Attack

The video demo shows the AV crashing into the car in front of it.

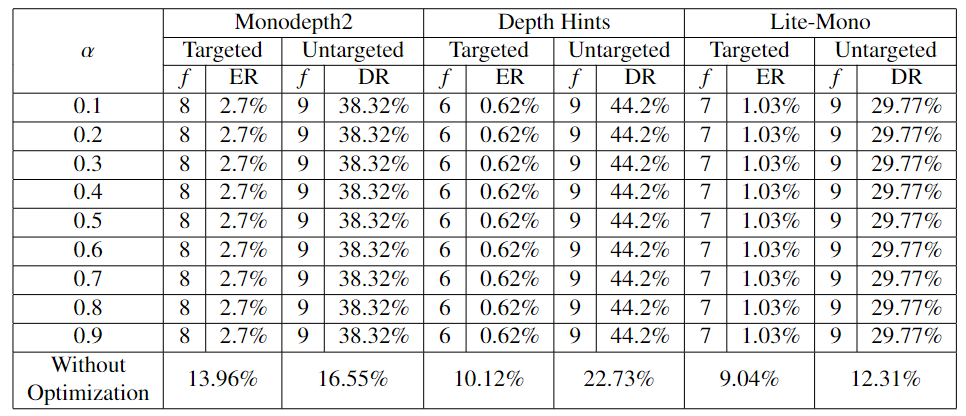

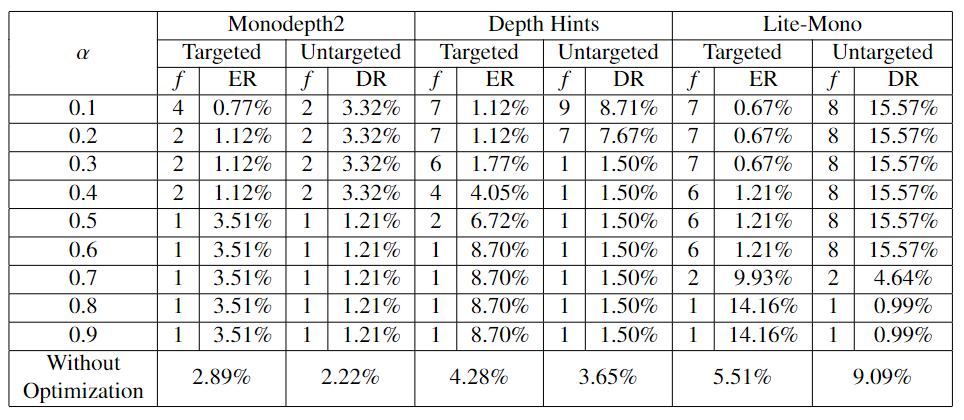

Attack Optimization

We present an attack optimization method that further improves the depth attack success rate by accounting for image blurriness. It reduces the targeted attack error rate by around 3% and increases the untargeted attack distortion rate by around 10%.
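The text above does not say how blurriness is quantified. As a hedged illustration only, the sketch below uses the variance of the Laplacian, a common sharpness heuristic, as a stand-in for a blurriness score. It is not the measure used in the paper. The helpers `laplacian_variance` and `box_blur` are hypothetical, and images are plain nested lists of grayscale values so the example needs only the standard library.

```python
from statistics import pvariance


def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Lower values suggest a blurrier image (illustrative heuristic).
    """
    h, w = len(img), len(img[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (img[y - 1][x] + img[y + 1][x]
                   + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    return pvariance(responses)


def box_blur(img):
    """3x3 mean filter on interior pixels; border pixels are left unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            total = sum(img[y + dy][x + dx]
                        for dy in (-1, 0, 1) for dx in (-1, 0, 1))
            out[y][x] = total / 9
    return out


if __name__ == "__main__":
    # Synthetic 8x8 image with one vertical edge: left half dark, right half bright.
    sharp = [[0] * 4 + [255] * 4 for _ in range(8)]
    print(laplacian_variance(sharp), laplacian_variance(box_blur(sharp)))
```

A score like this could serve as one term when comparing candidate lens settings, since a blurrier view scores lower than a sharp one. How the paper actually folds blurriness into its optimization is not described here.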

Concave Attack Optimization

Convex Attack Optimization